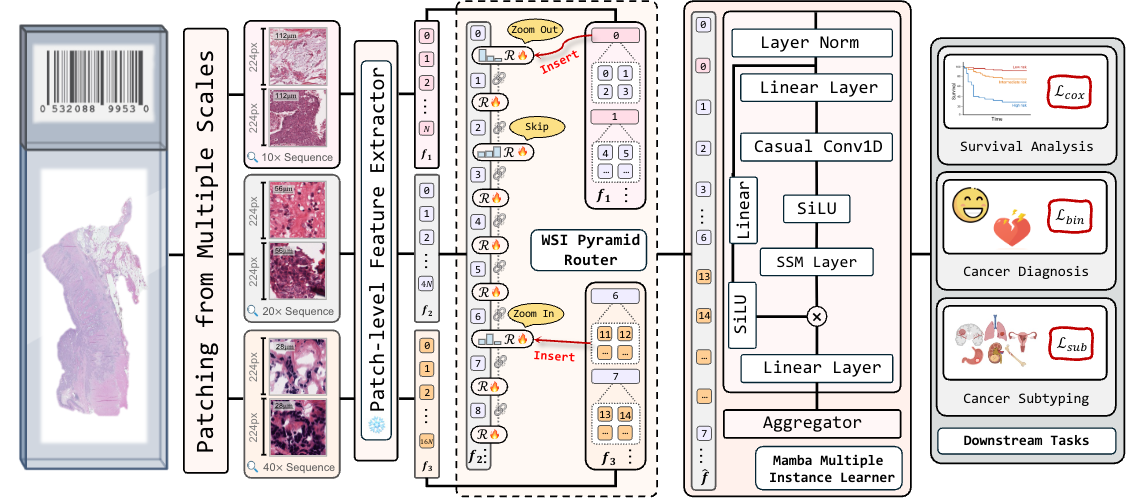

Ph.D. Candidate at HKUST

I am a second-year Ph.D. candidate at The Hong Kong University of Science and Technology (HKUST), supervised by Prof. Xiaomeng Li. My research lies at the intersection of artificial intelligence and medical image analysis, with the aim of advancing healthcare through machine intelligence. I am currently focused on advancing neural science with AI.

Previously, I received my B.Eng. degree in Software Engineering from Sichuan University, where I closely collaborated with Prof. Yan Wang as an undergraduate research intern.

Education

- The Hong Kong University of Science and Technology, Department of Electronic and Computer Engineering
  Ph.D. Candidate | Sep. 2023 - present

- Sichuan University, School of Software Engineering
  B.Eng. in Software Engineering | Sep. 2019 - Jul. 2023

- National University of Singapore, School of Computing
  SoC Research Summer Workshop | Jun. 2020 - Sep. 2020

Experience

- Sichuan University, Research Intern
  Supervisor: Prof. Yan Wang | Sep. 2020 - Jul. 2022

Honors & Awards

- Best TA Award (10,000 HKD, Top 1%) | HKUST, 2025

- Postgraduate Studentship (18,000 HKD per month) | HKUST, 2023

- RedBird PhD Scholarship (82,000 HKD, Top 1%) | HKUST, 2023

- Outstanding Graduate (Top 3%) | SCU, 2023

- First Prize (Top <2%), Research Summer Workshop | NUS, 2020

News

Publications

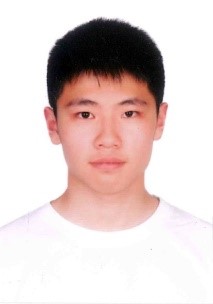

Read like a Pathologist: Enhancing Mamba with Pyramid Router for Whole Slide Image Analysis

Qixiang Zhang, Yi Li, Tianqi Xiang, Haonan Wang, Xiaomeng Li

Under review, 2025

WSI Analysis

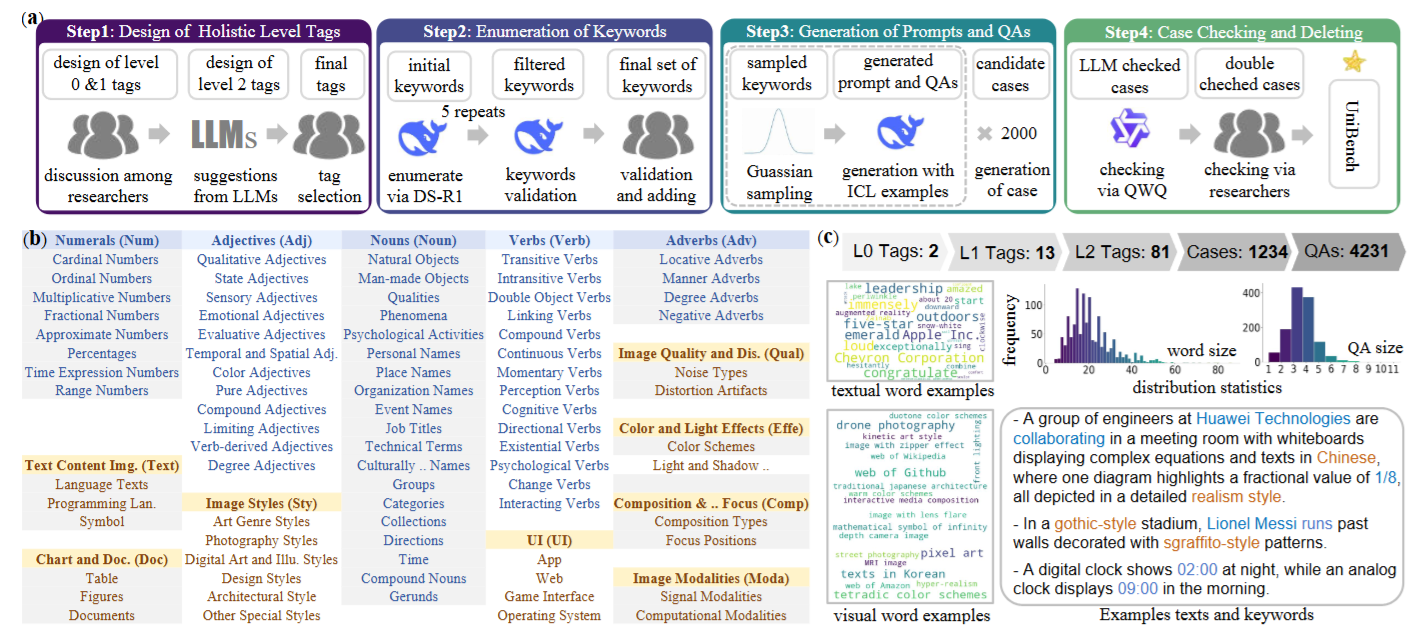

UniEval: Unified Holistic Evaluation for Unified Multimodal Understanding and Generation

Yi Li, Haonan Wang, Qixiang Zhang, Boyu Xiao, Chenchang Hu, Hualiang Wang, Xiaomeng Li

Submitted to the Annual Conference on Neural Information Processing Systems (NeurIPS), 2025

[Preprint] [Code]

Benchmark, MLLM

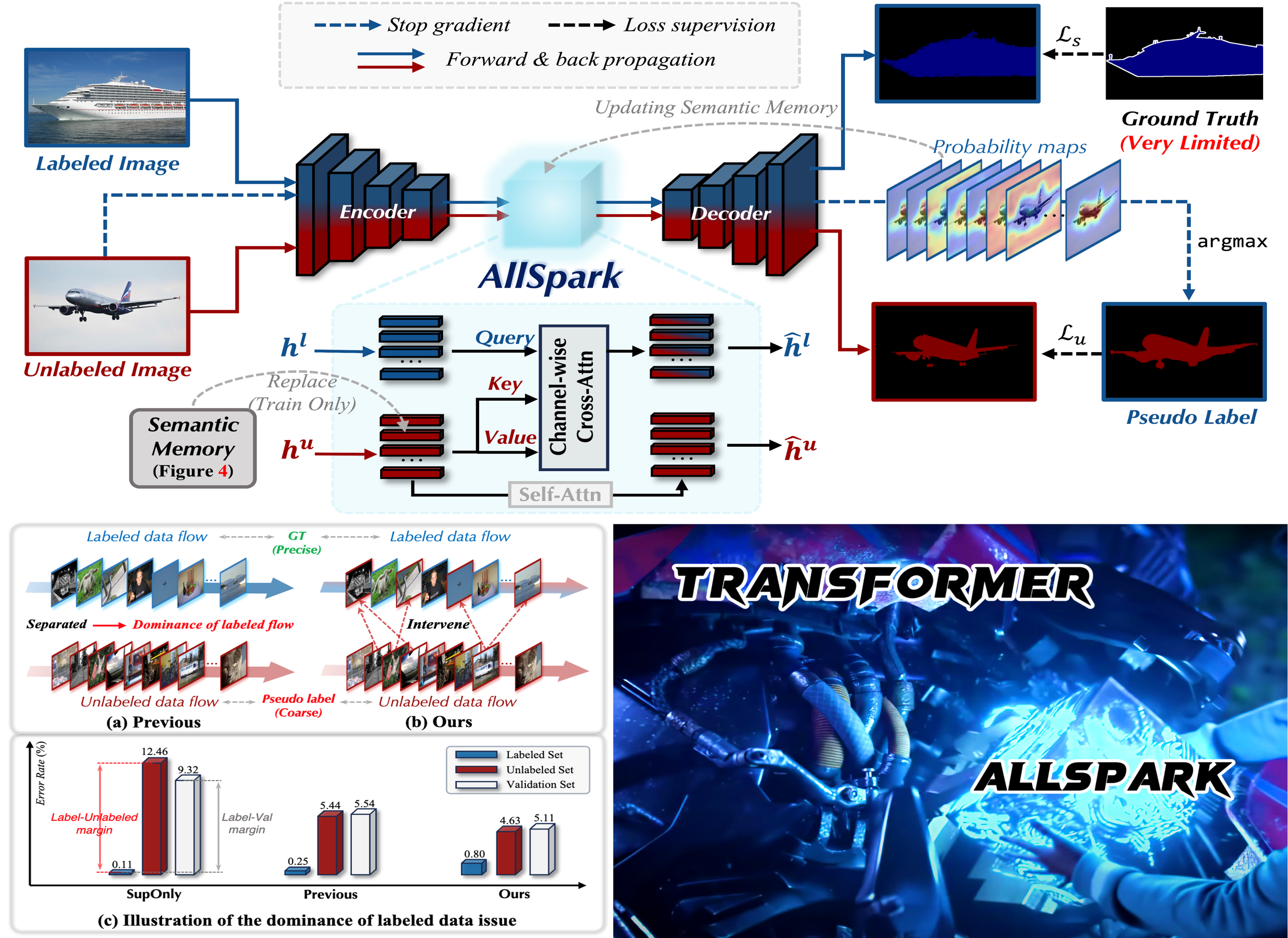

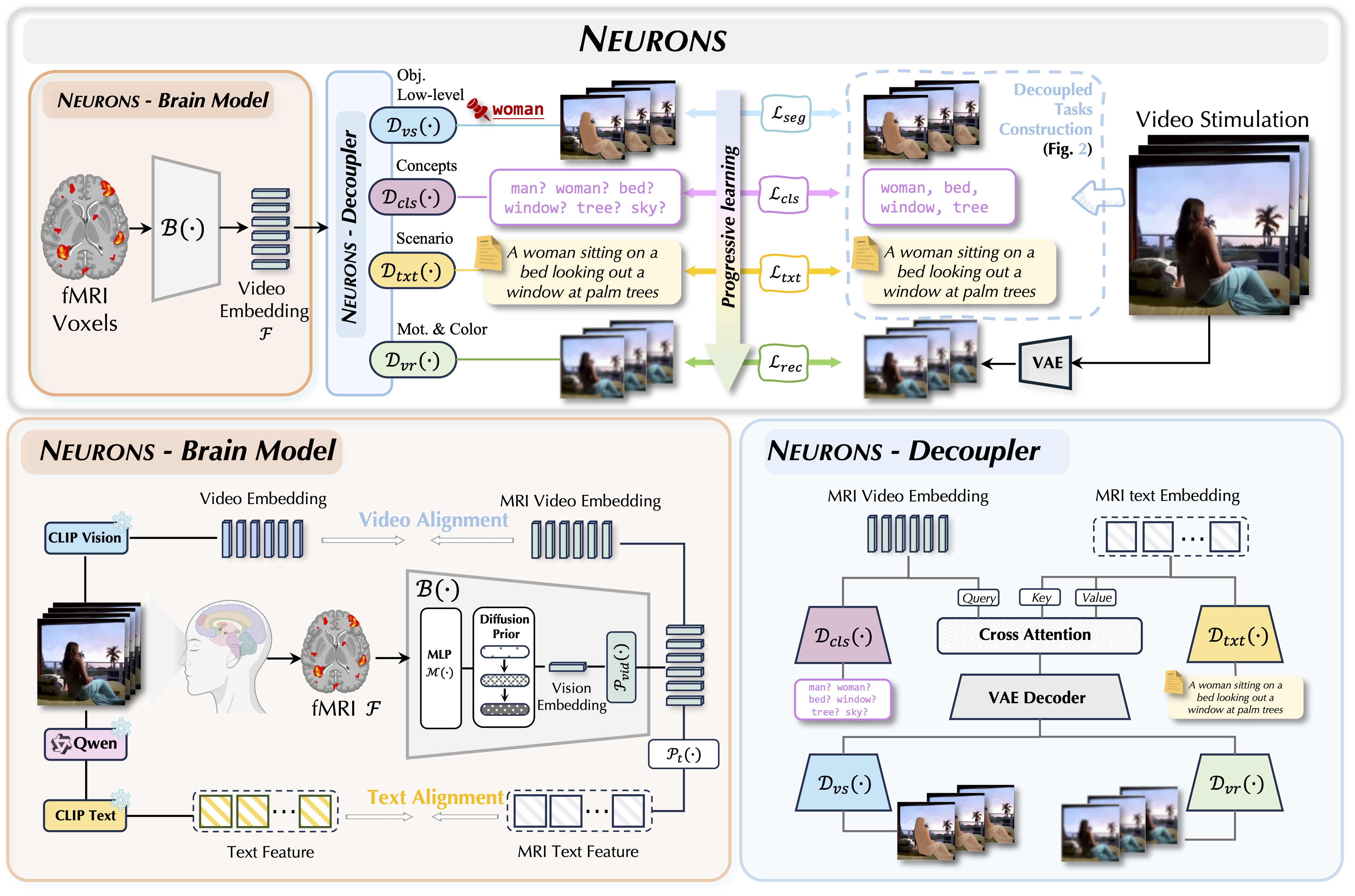

Neurons: Emulating the Human Visual Cortex Improves Fidelity and Interpretability in fMRI-to-Video Reconstruction

Haonan Wang*, Qixiang Zhang*, Lehan Wang, Xuanqi Huang, Xiaomeng Li (* equal contribution)

International Conference on Computer Vision (ICCV, CCF-A), 2025

[Preprint] [Code]

AI for Neural Science

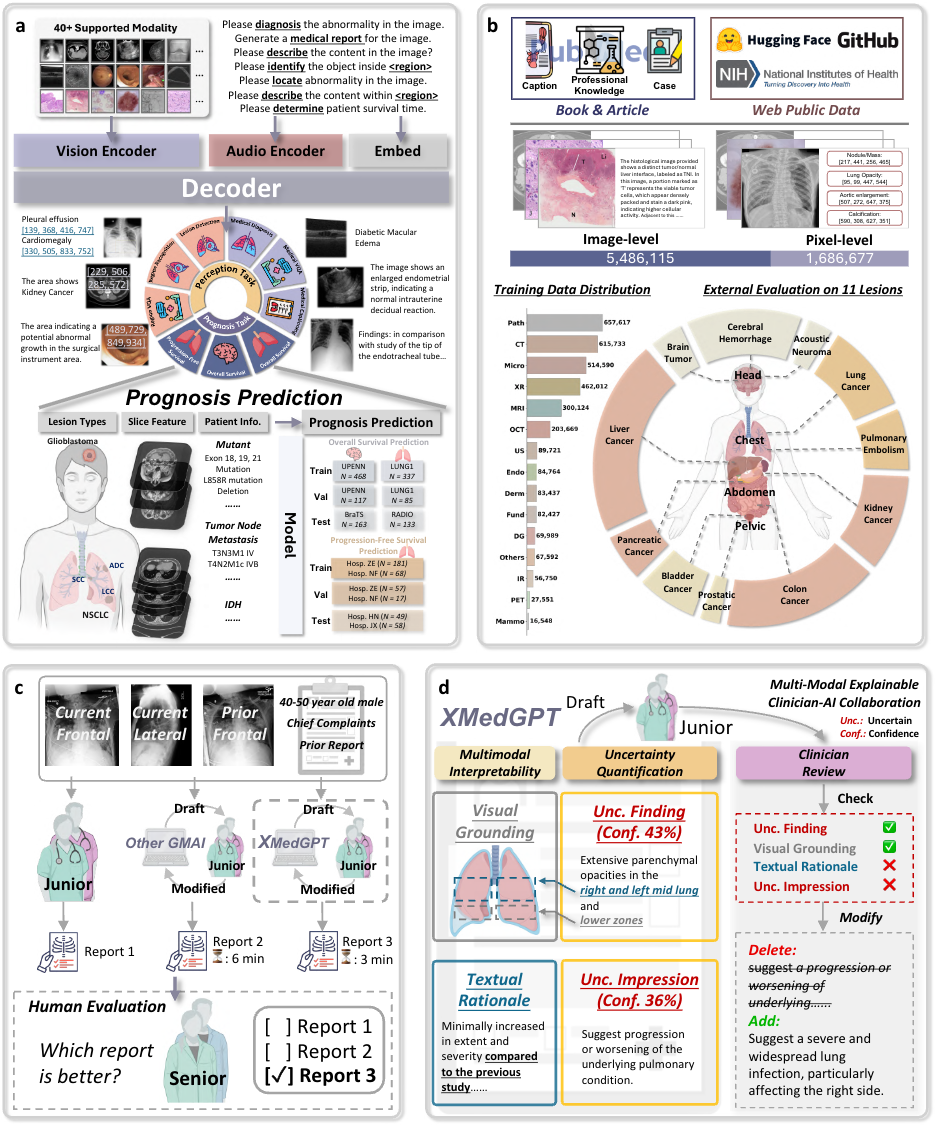

Multi-Modal Explainable Medical AI Assistant for Trustworthy Human-AI Collaboration

Honglong Yang, Shanshan Song, Yi Qin, Lehan Wang, Haonan Wang, Xinpeng Ding, Qixiang Zhang, Bodong Du, Xiaomeng Li

Under review, 2025

Generalist Medical AI systems have demonstrated expert-level performance in biomedical perception tasks, yet their clinical utility remains limited by inadequate multi-modal explainability and suboptimal prognostic capabilities. Here, we present XMedGPT, a clinician-centric, multi-modal AI assistant that integrates textual and visual interpretability to support transparent and trustworthy medical decision-making. XMedGPT not only produces accurate diagnostic and descriptive outputs, but also grounds referenced anatomical sites within medical images, bridging critical gaps in interpretability and enhancing clinician usability. The model achieves an Intersection over Union of 0.703 across 141 anatomical regions, and a Kendall’s tau-b of 0.479, demonstrating strong alignment between visual rationales and clinical outcomes. In survival and recurrence prediction, it surpasses prior leading models by 26.9%...

[Preprint]

MLLM, Benchmark

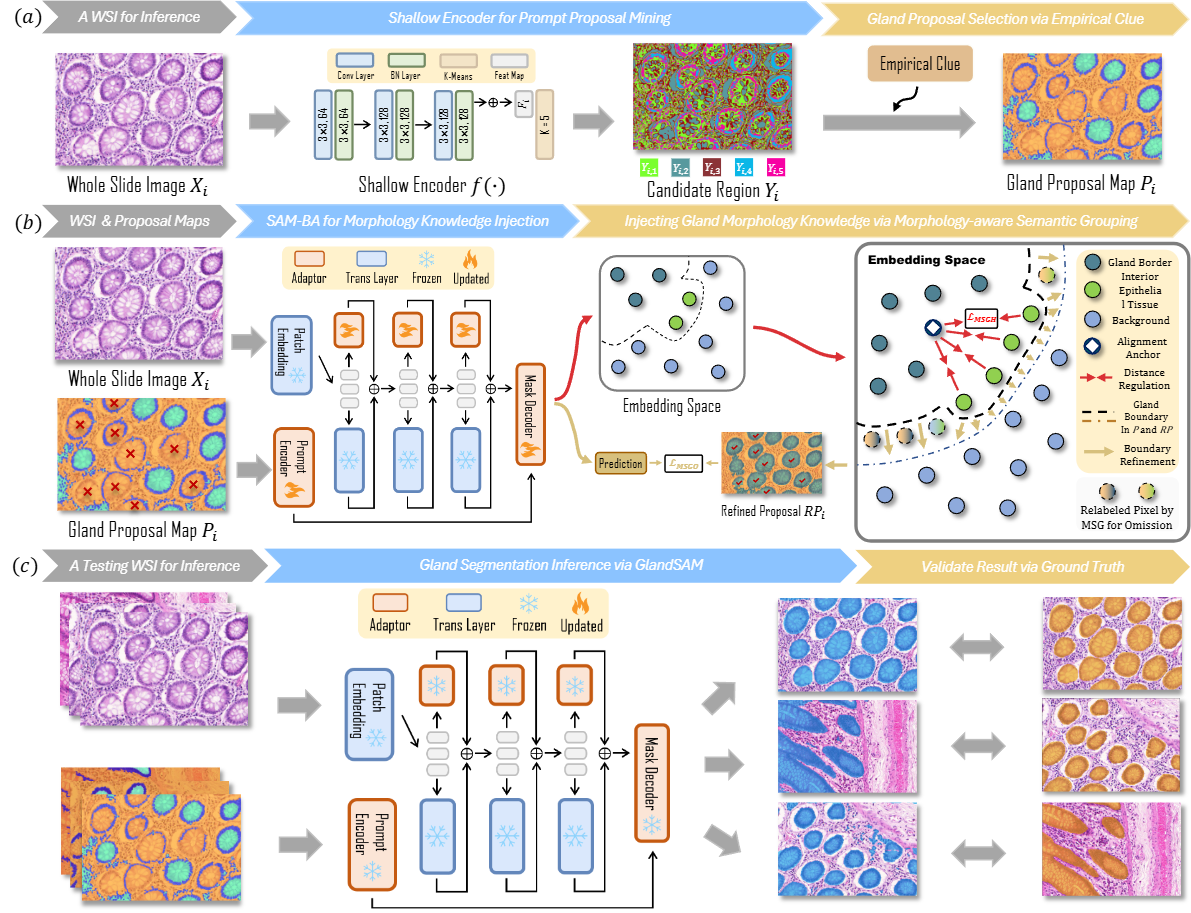

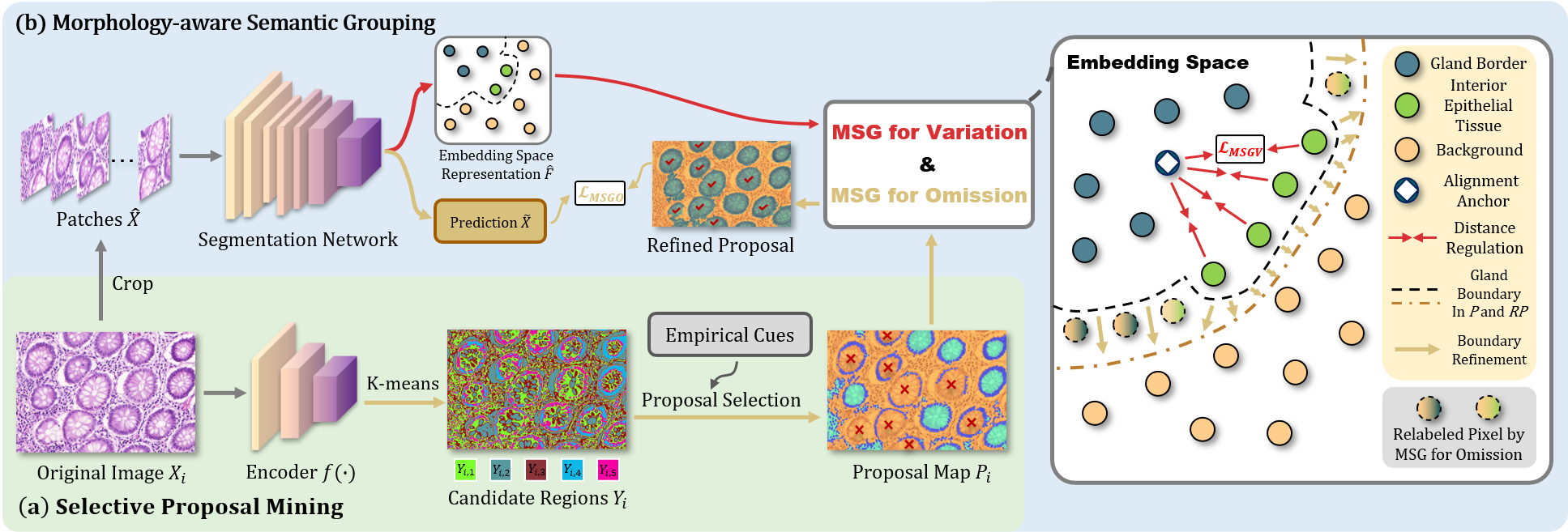

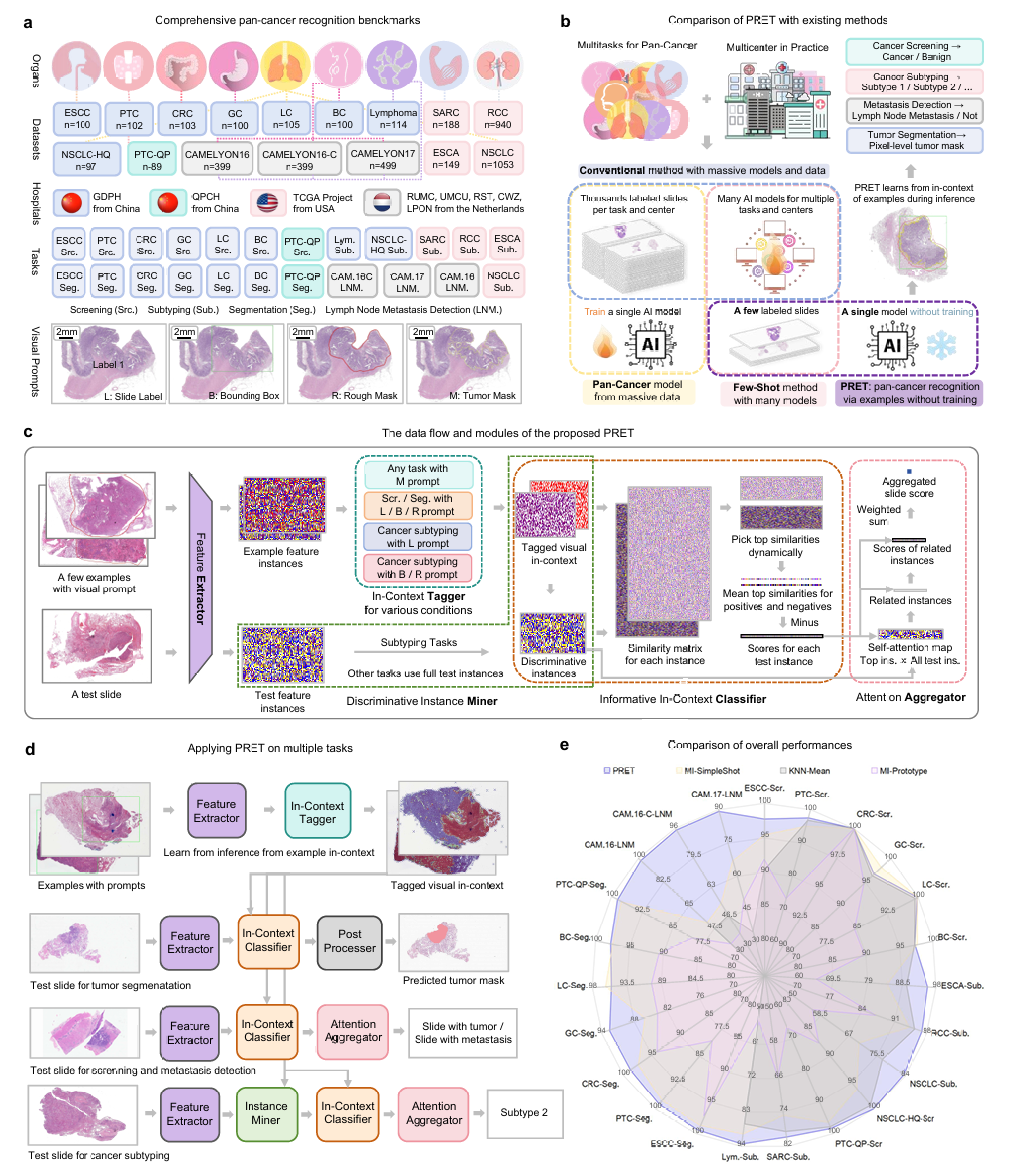

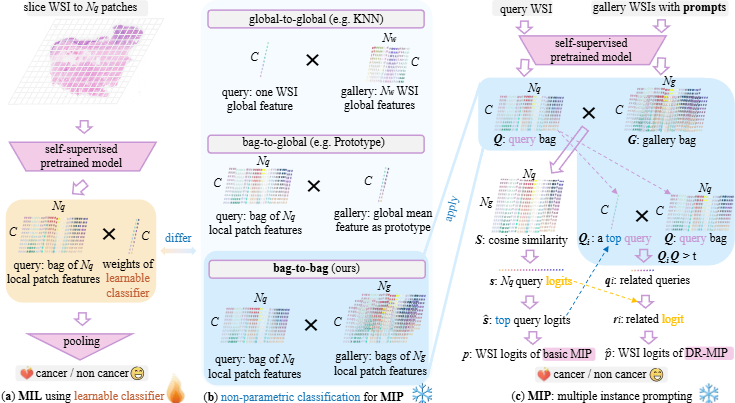

PRET: Achieving Pan-Cancer Recognition via a Few Examples Without Training

Yi Li, Ziyu Ning, Tianqi Xiang, Qixiang Zhang, Yi Min, Zhihao Lin, Feiyan Feng, Baozhen Zeng, Xuexia Qian, Lu Sun, Jiace Qin, Ling Xiang, Chao Fan, Tian Qin, Qian Wang, Xiu-Wu Bian, Qingling Zhang, Xiaomeng Li

Submitted to Nature Cancer (under review), 2025

...In this paper, we introduce a novel paradigm, Pan-cancer Recognition via Examples without Training (PRET). PRET learns from a few examples during the inference phase without model fine-tuning, offering a flexible, scalable, and effective solution for recognizing cancers across diverse organs, hospitals, and tasks with a single model. Through extensive evaluations across international hospitals and diverse benchmarks, our method outperforms existing approaches on 20 tasks, exceeding 97% on 15 benchmarks with a maximum improvement of 36.76%...

[Preview Model]

WSI Analysis

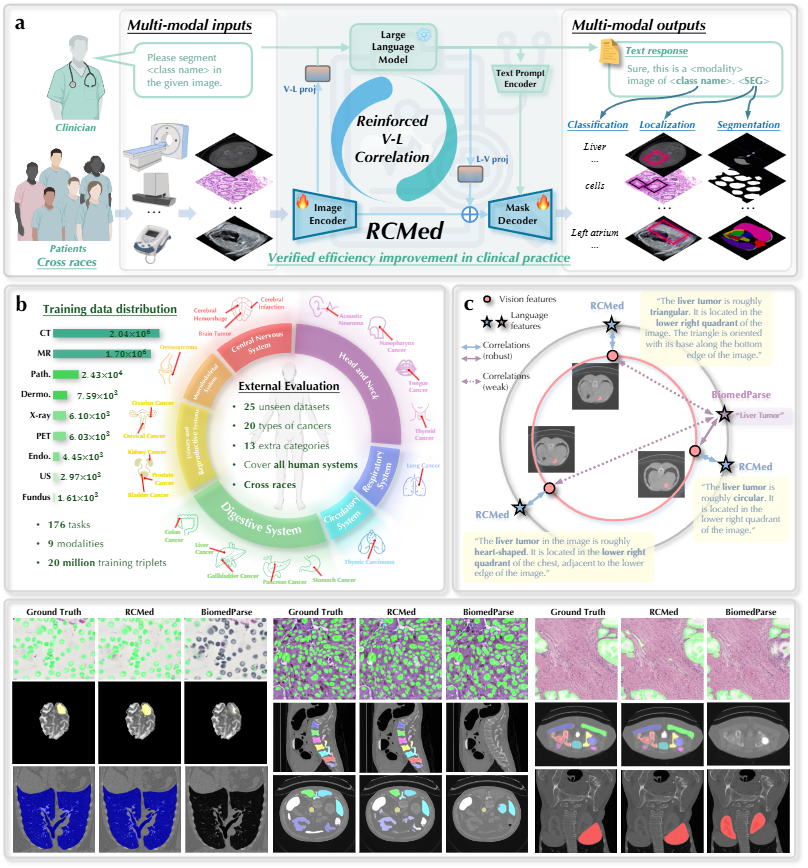

Reinforced Correlation Between Vision and Language for Precise Medical AI Assistant

Haonan Wang, Jiaji Mao, Lehan Wang, Qixiang Zhang, Marawan Elbatel, Yi Qin, Huijun Hu, Baoxun Li, Wenhui Deng, Weifeng Qin, Hongrui Li, Jialin Liang, Jun Shen, Xiaomeng Li

Submitted to Nature Communications (under review), 2025

...we propose RCMed, a full-stack AI assistant that enhances multimodal alignment in both input and output, enabling precise anatomical delineation, accurate localization, and reliable diagnosis for clinicians through hierarchical vision-language grounding. Trained on a dataset of 20M image-mask-description triplets, RCMed achieves state-of-the-art precision in contextualizing irregular lesions and subtle anatomical boundaries, excelling across 165 clinical tasks spanning 9 imaging modalities...

[Preprint] [Demo]

Segmentation, MLLM

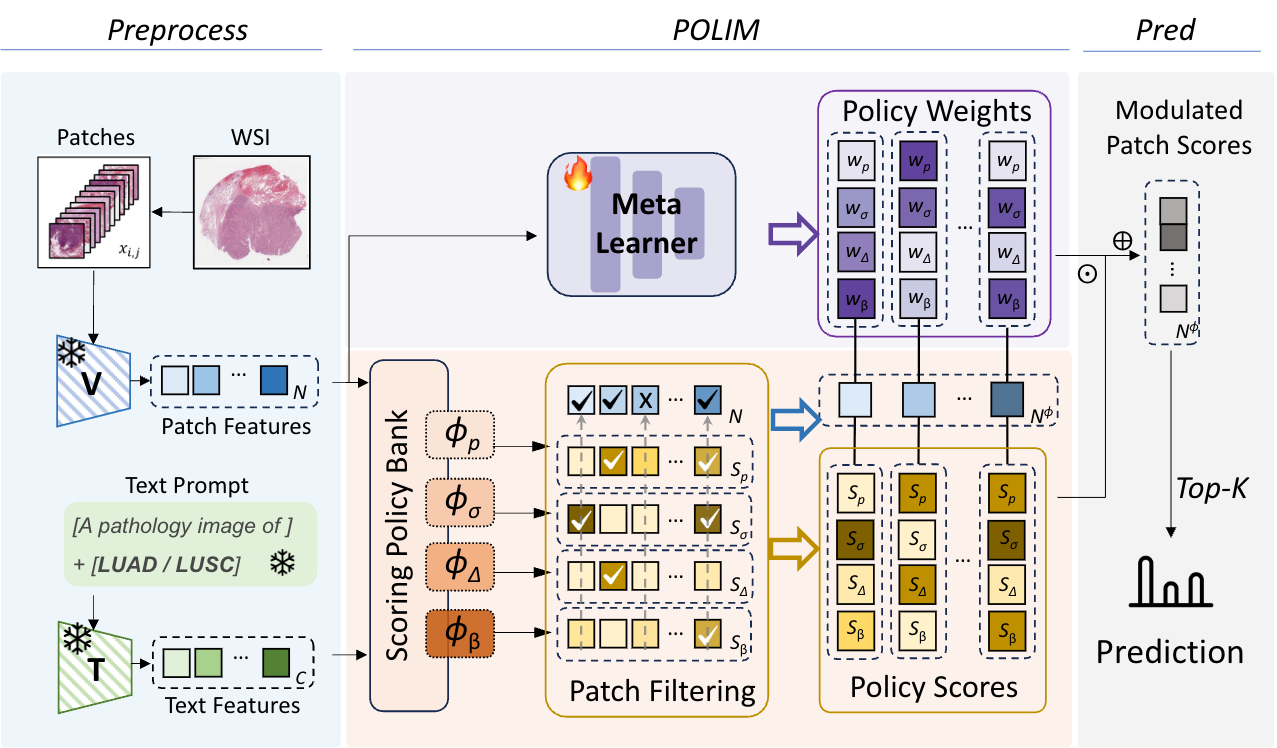

Few-Shot Lymph Node Metastasis Classification Meets High Performance on Whole Slide Images via the Informative Non-parametric Classifier

Yi Li, Qixiang Zhang, Tianqi Xiang, Xiaomeng Li

International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI), 2024

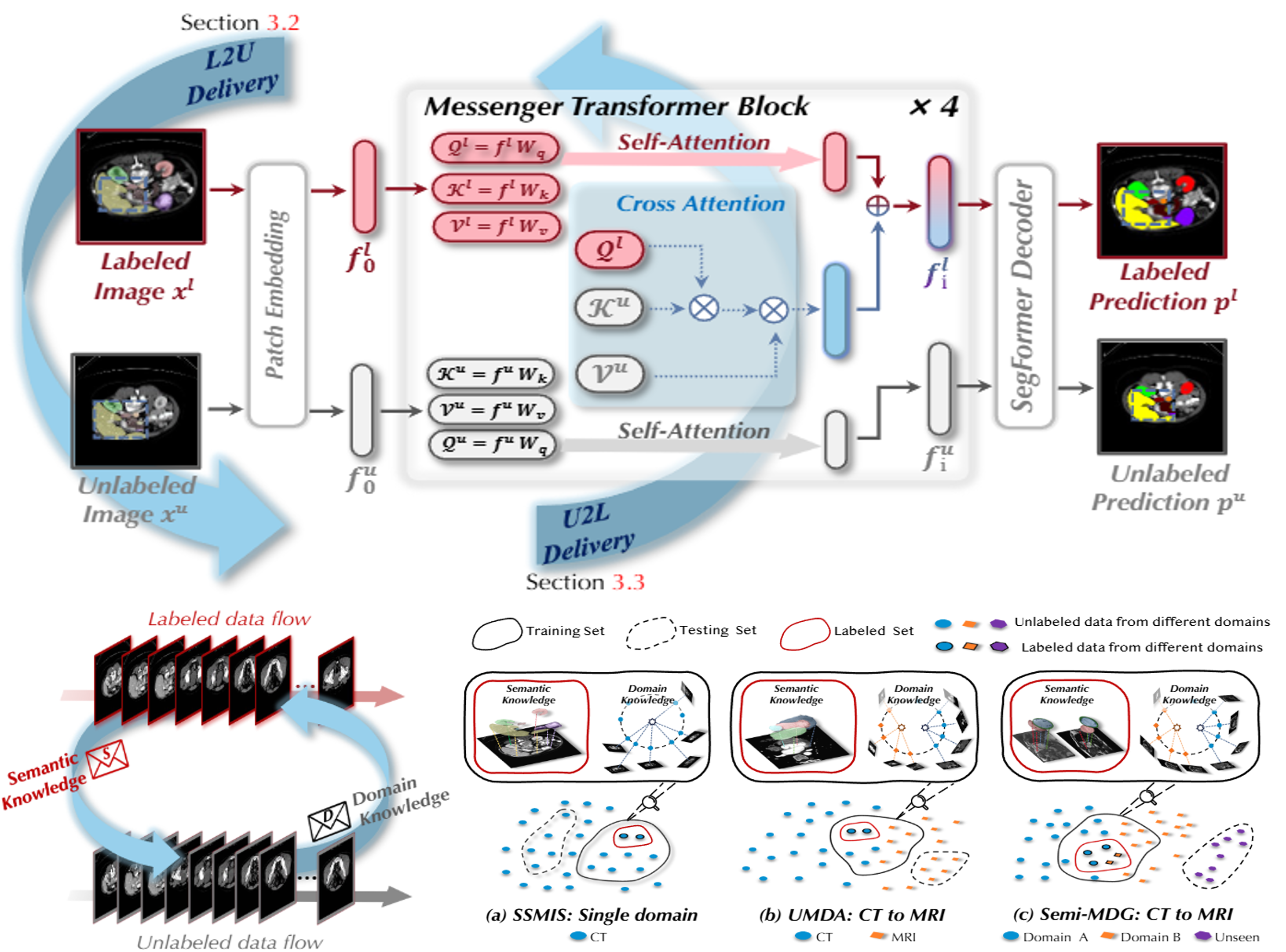

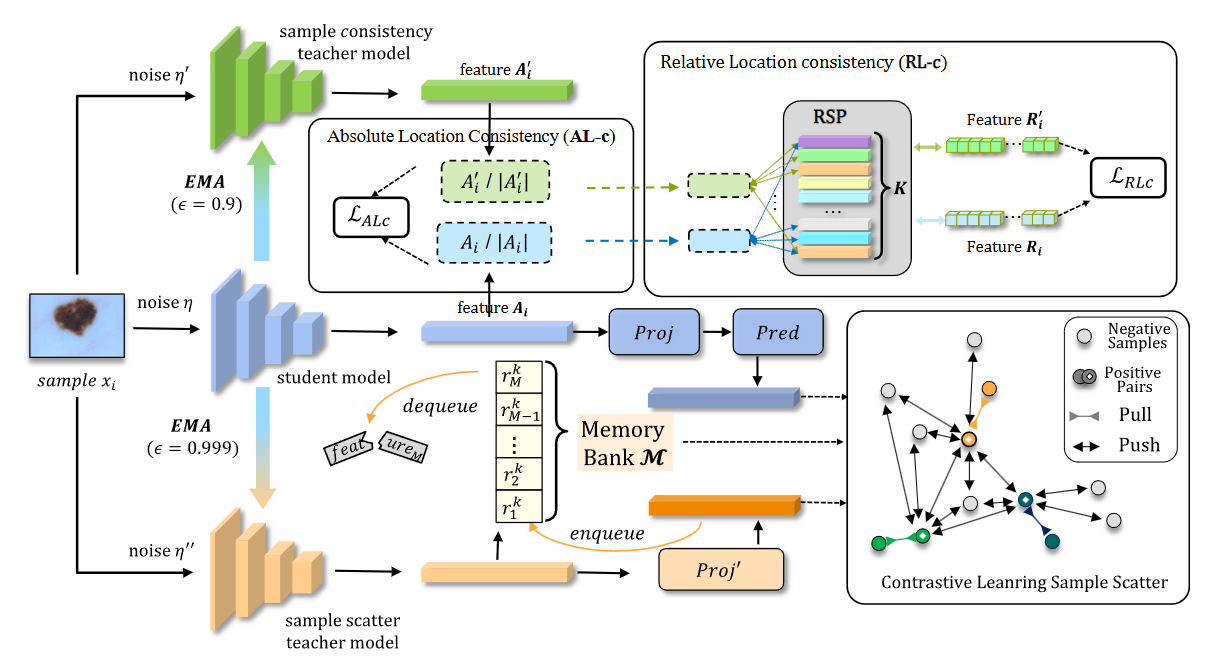

Dual Teacher Sample Consistency Framework for Semi-Supervised Medical Image Classification

Qixiang Zhang, Yuxiang Yang, Chen Zu, Jianjia Zhang, Xi Wu, Jiliu Zhou, Yan Wang

IEEE Transactions on Emerging Topics in Computational Intelligence (TETCI), 2023

[Paper]

Segmentation


Professional Activities

Journal Reviews

- IEEE Transactions on Medical Imaging (TMI)

- Medical Image Analysis (MIA)

- IEEE Transactions on Neural Networks and Learning Systems (TNNLS)

- IEEE Journal of Biomedical and Health Informatics (JBHI)

- IEEE Transactions on Circuits and Systems for Video Technology (TCSVT)

Conference Reviews

- European Conference on Computer Vision (ECCV)

- Medical Image Computing and Computer Assisted Intervention (MICCAI)

Life Beyond AI

I’ve always believed in the motto: “The most precious gift God has ever given us is the world.” Beyond research, I’m passionate about water sports, including swimming (I’m a Level-2 athlete), surfing, windsurfing, and kayaking. I’m also deeply committed to global exploration and volunteer work; my travels have taken me to cities in China, Germany, Singapore, the United States, and Canada. For the past two years, I’ve spent my summer vacations in Sanya, China, volunteering as a coastal lifeguard, a meaningful experience I intend to continue in the years ahead. May God bless everyone, and may God bless me!